SPECsfs2008_nfs.v3 Result

NEC Corporation: NV7500, 2 node active/active cluster

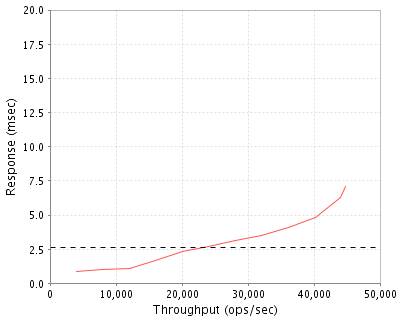

SPECsfs2008_nfs.v3 = 44728 Ops/Sec (Overall Response Time = 2.63 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
| --- | --- |
| 3996 | 0.9 |
| 7998 | 1.0 |
| 12013 | 1.1 |
| 16023 | 1.7 |
| 20033 | 2.3 |
| 24078 | 2.7 |
| 28090 | 3.1 |
| 32119 | 3.5 |
| 36160 | 4.1 |
| 40191 | 4.8 |
| 43979 | 6.3 |
| 44728 | 7.1 |
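The overall response time quoted in the headline can be approximately reproduced from the performance table. The sketch below assumes that the overall response time is the area under the response-time versus throughput curve (trapezoidal rule, starting from the origin) divided by the peak throughput. That definition is an assumption here, not something this report states, and the function name `overall_response_time` is illustrative. Because the table's response times are rounded to 0.1 msec, the result only approximates the published figure.

```python
# (throughput in ops/sec, response time in msec) pairs from the performance table.
points = [
    (3996, 0.9), (7998, 1.0), (12013, 1.1), (16023, 1.7),
    (20033, 2.3), (24078, 2.7), (28090, 3.1), (32119, 3.5),
    (36160, 4.1), (40191, 4.8), (43979, 6.3), (44728, 7.1),
]


def overall_response_time(points):
    """Trapezoidal area under the curve from (0, 0), divided by peak throughput.

    Assumed definition; the report itself only publishes the final number.
    """
    curve = [(0.0, 0.0)] + sorted(points)
    area = sum(
        (x1 - x0) * (y0 + y1) / 2.0
        for (x0, y0), (x1, y1) in zip(curve, curve[1:])
    )
    return area / curve[-1][0]


ort = overall_response_time(points)
print(f"Overall response time: {ort:.2f} msec")
```

With the rounded table values this comes out close to the 2.63 msec in the headline result.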

Product and Test Information

|  |  |
| --- | --- |
| Tested By | NEC Corporation |
| Product Name | NV7500, 2 node active/active cluster |
| Hardware Available | January 2010 |
| Software Available | January 2010 |
| Date Tested | January 2010 |
| SFS License Number | 9006 |
| Licensee Locations | Tokyo, Japan |

The NV7500 is a high-end 2-node active/active cluster model in the NEC Storage NV series. The NEC Storage NV series is a Network Attached Storage (NAS) product line that connects to a network and provides file access over that network. It supports file access protocols that allow files to be shared among clients running different operating systems. Storing shared data in one place also makes centralized control of data maintenance and other processing possible.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
| --- | --- | --- | --- | --- | --- |
| 1 | 1 | Server | NEC | NF7675-SR20 | NV7500 2node Cluster NAS server including 1 Disk Array Controller and 3 SAS Disk |
| 2 | 2 | NIC | NEC | NF7609-SN012 | NV7500 2port Network Interface card(Copper) |
| 3 | 2 | NIC | NEC | NF7609-SN122 | NV7500 Additional 2port Network Interface card(Copper) |
| 4 | 1 | NVRAM | NEC | NF7606-SV01 | NV7500 NVRAM option(2 cards, 1 per node) |
| 5 | 2 | Memory | NEC | NF7606-SC011 | NV7500 Additional Main Memory(4GB) |
| 6 | 5 | Disk Array Controller | NEC | NF7607-SA01 | NV7500 Additional Disk Array Controller(It can include up to 12 disks.) |
| 7 | 18 | Enclosure | NEC | NF7609-SE60 | NV7500 SAS/SATA Disk Enclosure(It can include up to 12 disks.) |
| 8 | 284 | SAS Disk | NEC | NF7609-SM625 | SAS 300GB 15K RPM disk drive |
| 9 | 2 | FC Switch | NEC | NF9330-SS014 | WB 330 4Gbps Fibre Channel Switch(16port) |
| 10 | 1 | Software License | NEC | UFS600-HC75002 | BaseProduct SC-LX6 - NV7500(5TB) |
| 11 | 1 | Software License | NEC | UFS600-HZ750A3 | BaseProduct SC-LX6 - NV7500(additional 10TB) |
| 12 | 1 | Software License | NEC | UFS602-HC75000 | NFS Option - NV7500 |

Server Software

|  |  |
| --- | --- |
| OS Name and Version | SC-LX V6.2 |
| Other Software | N/A |
| Filesystem Software | SC-LX V6.2 |

Server Tuning

| Name | Value | Description |
| --- | --- | --- |
| [Manage] - [Volume] - 'Update access time(atime)' | disable | Do not update access time. |
| [Manage] - [NFS] - [Exports] - 'Check subtree(subtree_check)' | disable | Do not perform subtree check. |
| [Manage] - [NFS] - [Exports] - 'Squashed Users' | no_root_squash | Specifies a user to be converted into an Anonymous user. Do not convert user IDs. |

Server Tuning Notes

All the other options were left unchanged from their default values.

Disks and Filesystems

| Description | Number of Disks | Usable Size |
| --- | --- | --- |
| SAS 147GB 15K RPM Disk Drives | 3 | 133.1 GB |
| SAS 300GB 15K RPM Disk Drives | 284 | 6.1 TB |
| Total | 287 | 6.2 TB |

|  |  |
| --- | --- |
| Number of Filesystems | 24 |
| Total Exported Capacity | 6226.5 GB |
| Filesystem Type | SXFS |
| Filesystem Creation Options | 4-GB Journal size, noatime, quota disable |
| Filesystem Config | N/A |
| Fileset Size | 5616.9 GB |

Twelve filesystems were created and used per node. One of the 24 filesystems consisted of 8 disks, divided into two 4-disk RAID 1+0 pools; each of the other 23 filesystems consisted of 12 disks, divided into two 6-disk RAID 1+0 pools. There were 6 Disk Array Controllers: one controlled 47 disks, and each of the other 5 controlled 48 disks.

Three 147 GB disk drives are included in the enclosure attached to the Disk Array Controller that is part of the product NF7675-SR20. They are used solely for the operating system's filesystems: two of the three form a RAID 1 pair on which the operating system filesystems are created, and the third is kept as a "hot" spare disk.
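The disk counts in this description can be cross-checked with a few lines of arithmetic. The following is a minimal sketch that uses only the figures quoted above; the variable names are illustrative.

```python
# Disks per filesystem: one 8-disk filesystem and 23 twelve-disk filesystems.
disks_per_filesystem = [8] + [12] * 23

# Disks per Disk Array Controller: one with 47 disks, five with 48 disks.
disks_per_controller = [47] + [48] * 5

# 147 GB drives reserved for the operating system (RAID 1 pair + hot spare).
os_disks = 3

data_disks = sum(disks_per_filesystem)
total_disks = sum(disks_per_controller)

print(f"filesystems:  {len(disks_per_filesystem)}")
print(f"data disks:   {data_disks}")
print(f"total disks:  {total_disks}")
print(f"data + OS:    {data_disks + os_disks}")
```

The data-disk count matches the 284 SAS 300GB drives, and the data disks plus the three operating system drives match the 287-disk total reported in the table.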

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
| --- | --- | --- | --- |
| 1 | Jumbo Gigabit Ethernet(Copper) | 8 | Jumbo Frame, balance-alb |

Network Configuration Notes

Each node used 4 ports. Bonding (balance-alb) was applied to the 4 ports on each node. All Gigabit network interfaces were connected to a Cisco Catalyst 4948 switch.

Benchmark Network

An MTU of 9000 was set for all connections to the switch. Each load generator (LG) was connected to the network via a single 1 Gigabit Ethernet port.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
| --- | --- | --- | --- | --- |
| 1 | 2 | CPU | Intel Xeon 5450, 3.0GHz, 4 cores, 1 chip, 4 cores/chip, Primary Cache 32KB(I)+32KB(D) on chip, Secondary Cache 12MB I+D on chip per chip, 6 MB shared / 2 cores | Networking, NFS protocol, SXFS filesystem |

Processing Element Notes

Each node has 1 physical processor.

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
| --- | --- | --- | --- | --- |
| Main memory | 12 | 2 | 24 | V |
| NVRAM | 0.5 | 2 | 1 | NV |
| Disk Array Controller Memory | 4 | 6 | 24 | NV |
| Grand Total Memory Gigabytes |  |  | 49 |  |
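The grand total in the memory table is the sum of size times instance count over the three rows. A short sketch of that arithmetic, using only the table's figures:

```python
# (description, size in GB, number of instances) from the memory table.
memory_rows = [
    ("Main memory", 12, 2),
    ("NVRAM", 0.5, 2),
    ("Disk Array Controller Memory", 4, 6),
]

# Per-row totals and the grand total across all rows.
row_totals = {name: size * count for name, size, count in memory_rows}
grand_total = sum(row_totals.values())

for name, total in row_totals.items():
    print(f"{name}: {total:g} GB")
print(f"Grand total: {grand_total:g} GB")
```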

Memory Notes

The NVRAM consists of DIMMs on PCI Express cards and is backed by a 3-day battery.

Stable Storage

[Cluster configuration]: The SUT has two controllers called "nodes". Both nodes can provide service, forming an active/active cluster, or one node can provide service while the other waits as a backup node, forming an active/stand-by cluster. The nodes periodically communicate with each other, forming a cluster "heart beat", via the following paths: 1) a special direct LAN connection between the nodes, 2) the LAN ports used for GUI/command operation by administrators, 3) disks, and 4) a direct serial (RS232-C) connection. Each node continuously sends some type of signal that depends on the medium. For example, in 3), one node writes certain data to a disk while the other reads it. When a hardware or software failure occurs in one node, the other detects it immediately because the expected communication from the failed node is missing. In that case, the surviving node takes over the failed node's service. Before doing so, however, the surviving node makes sure that the other node is really down by explicitly ordering it to shut down. This is done via 1), 2), and a direct connection to the other node's BMC, in this order.

[Battery-backed NVRAM]: Data is backed up in battery-backed NVRAM so that it is not lost when failures occur, including power failures. Incoming data is written to NVRAM if there is space in it, or otherwise to the disk area. NVRAM is mirrored to both nodes via InfiniBand. A success status is returned to the client only after the data has been written to both nodes' NVRAM, or after the data has been written to the disk of the node servicing the data. NVRAM is backed up by a 3-day battery implemented in the NV7500.

[Hardware redundancy]: Apart from the cluster redundancy in cluster models such as the SUT, all major hardware components in the NV series, including power-input units and fans, are redundant.
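The acknowledgement rule and the shutdown (fencing) order described above can be expressed as a small model. This is only an illustrative sketch of the stated rules, not NEC's implementation: the names `WriteState`, `can_acknowledge`, `FENCING_PATHS`, and `fence_peer`, as well as the `try_shutdown` callback, are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class WriteState:
    """Where a client's write currently resides (hypothetical model)."""
    in_local_nvram: bool = False
    in_peer_nvram: bool = False
    on_disk: bool = False


def can_acknowledge(write: WriteState) -> bool:
    """Success is returned only once the data is in both nodes' NVRAM,
    or has been written to the disk of the servicing node."""
    return (write.in_local_nvram and write.in_peer_nvram) or write.on_disk


# Order in which the surviving node orders the peer to shut down,
# as listed in the text: path 1), path 2), then the peer's BMC.
FENCING_PATHS = ["direct LAN", "administration LAN", "BMC"]


def fence_peer(try_shutdown: Callable[[str], bool]) -> Optional[str]:
    """Try each path in order; return the first path that succeeds."""
    for path in FENCING_PATHS:
        if try_shutdown(path):
            return path
    return None


# A write held only in the local NVRAM is not yet acknowledged.
print(can_acknowledge(WriteState(in_local_nvram=True)))
# Mirrored to both NVRAMs: acknowledged.
print(can_acknowledge(WriteState(in_local_nvram=True, in_peer_nvram=True)))
```

The text does not say what happens when every shutdown path fails, so the sketch simply returns `None` in that case.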

System Under Test Configuration Notes

Measurements were conducted on an independent network (in terms of both Ethernet and Fibre Channel).

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
| --- | --- | --- | --- | --- |
| 1 | 12 | NEC | Express5800/120Rh-1 | Workstation with 2GB RAM and Linux operating system(RHEL5.2) |
| 2 | 1 | Cisco | Catalyst 4948-10GE | Cisco Catalyst 4948-10GE Ethernet Switch |

Load Generators

|  |  |
| --- | --- |
| LG Type Name | LG1 |
| BOM Item # | 1 |
| Processor Name | Intel Xeon E5205 |
| Processor Speed | 1.86GHz |
| Number of Processors (chips) | 1 |
| Number of Cores/Chip | 2 |
| Memory Size | 2 GB |
| Operating System | Linux 2.6.18-92.el5(RHEL5.2) |
| Network Type | Intel 80003ES2LAN Onboard Gigabit Ethernet(Copper) X 1 |

Load Generator (LG) Configuration

Benchmark Parameters

|  |  |
| --- | --- |
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 12 |
| Number of Processes per LG | 24 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | AUTO |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
| --- | --- | --- | --- | --- |
| 1..12 | LG1 | N1 | F1,F2,...,F24 | N/A |

Load Generator Configuration Notes

All filesystems were mounted on all clients, which were connected to the same physical and logical network.

Uniform Access Rule Compliance

Filesets were uniformly distributed over 24 filesystems. Load was uniformly distributed over network ports using the balance-alb feature.
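The uniform distribution can be illustrated with the benchmark parameters: 12 load generators running 24 processes each, spread over 24 filesystems. The sketch below assumes that each load generator assigns its processes to the target filesystems F1 to F24 in round-robin order. That assignment scheme is an assumption made for illustration; the report does not state how processes were mapped.

```python
from collections import Counter

load_generators = 12     # Number of Load Generators
processes_per_lg = 24    # Number of Processes per LG
filesystems = 24         # Target filesystems F1..F24

# Assumed round-robin mapping of each LG's processes onto the filesystems.
processes_per_fs = Counter()
for lg in range(load_generators):
    for proc in range(processes_per_lg):
        processes_per_fs[f"F{proc % filesystems + 1}"] += 1

total_processes = sum(processes_per_fs.values())
print(f"total load-generating processes: {total_processes}")
print(f"processes per filesystem: {sorted(set(processes_per_fs.values()))}")
```

Under this assumption every filesystem is targeted by the same number of processes, one from each load generator.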

Other Notes

Config Diagrams

Generated on Mon Sep 13 11:10:23 2010 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 12-Aug-2010