SPEC SFS®2014_database Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


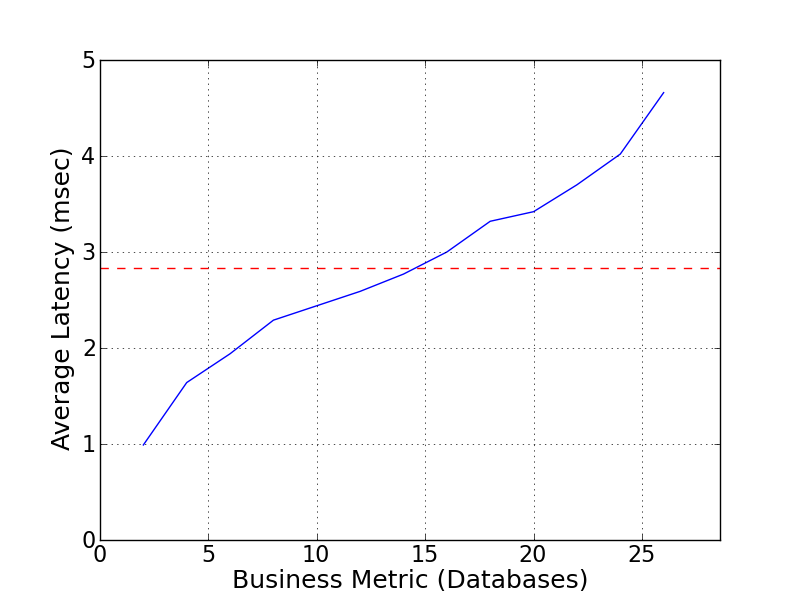

| SPEC SFS(R) Subcommittee | SPEC SFS2014_database = 26 Databases |
|---|---|
| SPEC SFS(R) 2014 ITM-33 Reference Solution | Overall Response Time = 2.83 msec |


| SPEC SFS(R) 2014 ITM-33 Reference Solution | |
|---|---|
| Tested by | SPEC SFS(R) Subcommittee |
| Hardware Available | September 2014 |
| Software Available | September 2014 |
| Date Tested | September 2014 |
| License Number | 0 |
| Licensee Locations | Gainesville, VA USA |

The SPEC SFS(R) 2014 Reference Solution consists of a TDV 2.0 micro-cluster appliance, based on Intel Avoton SoC nodes, connected to an ITM-33 storage cluster using the NFSv3 protocol over an Ethernet network.

The ITM-33 cluster, built on a proven scale-out storage platform, provides IO/s from a single file system, single volume. The ITM-33 accelerates business and increases speed-to-market by providing scalable, high-performance storage for mission-critical and highly transactional applications. In addition, the single filesystem, single volume, and linear scalability of the BSD-based operating system enable enterprises to scale storage seamlessly with their environment and application while maintaining flat operational expenses. The ITM-33 is based on enterprise-class 2.5" 10,000 RPM Serial Attached SCSI drive technology, 1GbE Ethernet networking, and a high-performance Infiniband back-end. The ITM-33 is configured with 4 nodes in a single file system, single volume.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 4 | Storage Cluster Node | Generic | ITM-33 | 4 ITM-33-2U-Dual-24GB-4x1GE-7373GB nodes configured into a cluster. |
| 2 | 1 | Micro-cluster Appliance | Generic | TDV 2.0 | An appliance with 32 nodes and an internal network switch. |
| 3 | 1 | Infiniband switch | Generic | 12200-18 | An 18 port QDR Infiniband switch. |
| 4 | 1 | Ethernet switch | Generic | DCS-7150S-64-CL | A 10 GbE capable network switch. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | ITM-33 Storage Nodes | Operating System | cluster FS Version 7.0.2.1 | The ITM-33 nodes were running cluster FS 7.0.2.1 |
| 2 | Filesystem Software License | cluster FS | cluster FS Version 7.0.2.1 | cluster FS 7.0.2.1 License |
| 3 | Load Generators | Operating System | CentOS 6.4 64-bit | The load generator nodes in the TDV 2.0 appliance with 32 nodes running CentOS 6.4 64-bit. Linux kernel 2.6.32-358.el6.x86_64 |

Load generator internal cluster switch

| Parameter Name | Value | Description |
|---|---|---|
| Port speed | 2.5 Gb | internal switch port speed |

The port speed on the internal switch for the load generators was set to 2.5Gb.

None

| Parameter Name | Value | Description |
|---|---|---|
| n/a | n/a | n/a |

No software tunings were used - default NFS mount options were used.

No opaque services were in use.

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | ITM-33 Storage Nodes: 600GB SAS 10k RPM Disk Drives | 16+2/2 Parity protected (default) | Yes | 96 |
| 2 | Load Generator: 80GB SATA SSD | None | No | 32 |

| | |
|---|---|
| Number of Filesystems | 1(IFS->NFSv3) |
| Total Capacity | 26 TiB |
| Filesystem Type | IFS->NFSv3 |

The file system was created on the ITM-33 cluster by using all default parameters.

The file system on the load generating clients was created at OS install time using default parameters with an ext4 file system.

The storage in the load generating clients did not contribute to the performance of the solution under test; all benchmark data was stored on the IFS file system and accessed via NFSv3.

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 1 GbE ITM-33 Storage Nodes | 4 | ITM-33 front end Ethernet ports, configured with MTU=1500 |
| 2 | QDR Infiniband ITM-33 Storage Nodes | 4 | ITM-33 back end Infiniband ports, running at QDR |
| 3 | 10 GbE Load generator benchmark network | 1 | Load generator benchmark network Ethernet ports, configured with MTU=1500 |

All NFSv3 benchmark traffic flowed through the DCS-7150S-64-CL Ethernet switch.

All load generator clients were connected to an internal switch in the micro-cluster appliance via 2.5GbE. This internal switch was connected to the 10 GbE switch.

The Infiniband network is part of the ITM-33 storage node architecture and is not configured directly by the user. It carries inter-node cluster traffic. Since it is not configurable by the user, it is not included in the switch list.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | DCS-7150S-64-CL Ethernet Switch | 10 GbE Ethernet load generator to storage interconnect | 52 | 47 | No uplinks used |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 32 | CPU | load generators | Intel Atom C2550 2.4GHz Quad-core CPU | load generator network, NFS client |
| 2 | 8 | CPU | ITM-33 storage nodes | Intel(R) Xeon(R) CPU E5-2620 v2 @ 2.4GHz Quad-core CPU | ITM-33 Storage node networking, NFS, file system, device drivers |

Each ITM-33 storage node has 2 physical processors, each with 4 cores and SMT enabled.

Each load generator had a single physical processor.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| ITM-33 Storage Node System Memory | 24 | 4 | V | 96 |
| ITM-33 Storage Node Integrated NVRAM module with Vault-to-Flash | 0.5 | 4 | NV | 2 |
| Load generator memory | 8 | 32 | V | 256 |
| Grand Total Memory Gibibytes | | | | 354 |
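The totals in the memory table can be re-derived from the per-instance sizes and instance counts. The following is a minimal sketch of that arithmetic; the variable names are illustrative and not part of the SPEC report tooling.

```python
# Rows from the memory table: (description, size in GiB, number of instances).
memory_rows = [
    ("ITM-33 Storage Node System Memory", 24, 4),
    ("ITM-33 Storage Node Integrated NVRAM module", 0.5, 4),
    ("Load generator memory", 8, 32),
]

# Per-row total is size multiplied by instance count.
row_totals = {name: size * count for name, size, count in memory_rows}

# Grand total is the sum of the per-row totals.
grand_total_gib = sum(row_totals.values())

for name, total in row_totals.items():
    print(f"{name}: {total:g} GiB")
print(f"Grand total: {grand_total_gib:g} GiB")
```

The per-row results (96, 2, and 256 GiB) and their sum match the Total GiB column and the reported grand total of 354 GiB.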

Each storage controller has main memory that is used for the operating system and for caching filesystem data. A separate, integrated battery-backed RAM module is used to provide stable storage for writes that have not yet been written to disk.

Each ITM-33 storage cluster node is equipped with an NVRAM journal that stores writes to the local disks. The NVRAM has backup power to save data to dedicated on-card flash in the event of power loss.

The load generating clients and all components between them and the storage cluster are not stable storage and are not configured as such; they therefore comply with the protocol requirements for stable storage.

The system under test consisted of 4 ITM-33 storage nodes, 2U each, connected by QDR Infiniband. Each storage node was configured with a single 10GbE network interface connected to a 10GbE switch. There were 32 load generating clients, each connected to the same Ethernet switch as the ITM-33 storage nodes.

None

Each load generating client mounted the single file system using NFSv3. Because there were four ITM-33 nodes, the first eight clients mounted the file system from the first ITM-33 node, the next eight clients mounted the file system from the second ITM-33 node, and so on. The order of the clients as used by the benchmark was round-robin distributed such that as the load scaled up, each additional process used the next node in the ITM-33 cluster. This ensured an even distribution of load over the network and among the ITM-33 nodes.
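The mount layout and client ordering described above can be sketched as follows. This is an illustration under stated assumptions, not the benchmark's own configuration: clients and nodes are numbered from 0, and the function names `mount_node` and `benchmark_client_order` are hypothetical.

```python
NUM_CLIENTS = 32
NUM_NODES = 4
CLIENTS_PER_NODE = NUM_CLIENTS // NUM_NODES  # 8 clients per storage node

def mount_node(client_index):
    """Storage node a client mounts from: clients 0-7 use node 0, 8-15 use node 1, and so on."""
    return client_index // CLIENTS_PER_NODE

def benchmark_client_order():
    """Client order in which consecutive entries mount from consecutive storage nodes."""
    return [
        node * CLIENTS_PER_NODE + slot
        for slot in range(CLIENTS_PER_NODE)
        for node in range(NUM_NODES)
    ]

order = benchmark_client_order()
nodes_hit = [mount_node(client) for client in order]
print(order[:8])      # first eight clients in benchmark order
print(nodes_hit[:8])  # storage node each of those clients mounts from
```

With this ordering, each additional process lands on the next storage node in turn, and once all 32 clients are in use every node serves exactly eight of them.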

None

None

Generated on Wed Mar 13 16:54:22 2019 by SpecReport
