SPECsfs2008_cifs Result

Huawei Symantec: Oceanspace N8500 Clustered NAS Storage System

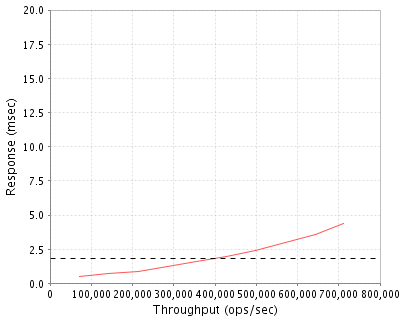

SPECsfs2008_cifs = 712664 Ops/Sec (Overall Response Time = 1.81 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---|---|
| 71201 | 0.5 |
| 142149 | 0.7 |
| 213364 | 0.9 |
| 284738 | 1.2 |
| 356037 | 1.6 |
| 427781 | 2.0 |
| 498954 | 2.4 |
| 571026 | 3.0 |
| 644303 | 3.6 |
| 712664 | 4.4 |
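The headline overall response time can be related to the throughput and response figures in the Performance table. The sketch below is an illustration, not the benchmark's own reporting code: it assumes the overall response time is the area under the response-time curve divided by the peak throughput, integrated with the trapezoidal rule and with the curve anchored at the origin. The function name and the `anchor_at_origin` parameter are hypothetical.

```python
# Throughput (ops/sec) and response time (msec) pairs copied from the Performance table.
points = [
    (71201, 0.5), (142149, 0.7), (213364, 0.9), (284738, 1.2), (356037, 1.6),
    (427781, 2.0), (498954, 2.4), (571026, 3.0), (644303, 3.6), (712664, 4.4),
]


def overall_response_time(points, anchor_at_origin=True):
    """Area under the response-time curve divided by peak throughput.

    Assumption: trapezoidal integration, optionally starting at (0, 0).
    """
    curve = ([(0, 0.0)] if anchor_at_origin else []) + list(points)
    area = sum(
        (x1 - x0) * (y0 + y1) / 2
        for (x0, y0), (x1, y1) in zip(curve, curve[1:])
    )
    return area / curve[-1][0]


ort = overall_response_time(points)
print(f"Overall response time: {ort:.2f} msec")
```

With the rounded response times published in the table, this comes out at about 1.81 msec, which agrees with the reported figure. Dropping the origin anchor gives a lower value, so the anchored form is the closer match under these assumptions.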

Product and Test Information

| Tested By | Huawei Symantec |
| Product Name | Oceanspace N8500 Clustered NAS Storage System |
| Hardware Available | March 2011 |
| Software Available | March 2011 |
| Date Tested | March 2011 |
| SFS License Number | 3726 |
| Licensee Locations | The West Zone Science Park of UESTC, No. 88, Tianchen Road, Chengdu, CHINA |

The N8500 Clustered NAS Storage System is an advanced, modular, and unified storage platform (including NAS, FC-SAN, and IP SAN) designed for high-end and mid-range storage applications. Huawei Symantec fully owns the intellectual property rights (IPR) of the Huawei Symantec Oceanspace N8500 Clustered NAS Storage System. Oceanspace N8500 is a professional integrated storage solution that optimally utilizes storage resources. Its superior performance, reliability, ease of management, and flexible scalability suit it to a wide range of applications, including high-end computing, databases, websites, file servers, streaming media, digital video surveillance, and file backup.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 8 | Engine Node | Huawei Symantec | N8500 | Oceanspace N8500 Clustered NAS Storage System |
| 2 | 1 | Fiber channel switch | HP | HP StorageWorks DC SAN Backbone Director | HP StorageWorks DC SAN Backbone Director 384*8G ports |
| 3 | 18 | Storage Controller | Huawei Symantec | S5600 | Huawei Symantec Oceanspace N8500 Storage Unit: storage array controller, 4U hard disk frame, 24 hard disks |
| 4 | 54 | Storage Expansion | Huawei Symantec | D200 | Huawei Symantec Oceanspace N8500 Storage Unit: storage array expansion, 4U hard disk frame, 24 hard disks |
| 5 | 1728 | Disk | Huawei Symantec | FC-450GB | 450GB 15K RPM FC disk |

Server Software

| OS Name and Version | N8000 Clustered NAS Engine Software V100R002 |
| Other Software | Integrated Storage Management V100R003C01 |
| Filesystem Software | VxFS |

Server Tuning

| Name | Value | Description |
|---|---|---|
| read_pref_io | 32768 | Preferred read request size. |
| noatime | on | Mount option added to all filesystems to disable updating of access times. |
| vx_ninode | 3000000 | Maximum number of inodes to cache. |
| write_throttle | 1 | The number of dirty pages per file. |

Server Tuning Notes

None.

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---|---|
| 96 disks per storage unit (one S5600 plus three D200s), bound into eight 6+6 RAID-10 LUNs. Each filesystem is built from 18 LUNs, one from each storage unit, with a stripe depth of 18 and a stripe size of 1024 KB. A total of eight filesystems were set up for this test. | 1728 | 350.9 TB |
| Total | 1728 | 350.9 TB |

| Number of Filesystems | 8 |
| Total Exported Capacity | 196608 GB |
| Filesystem Type | VxFS |
| Filesystem Creation Options | Default |
| Filesystem Config | One file system was mounted and exported from each node. |
| Fileset Size | 83213.1 GB |
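The disk layout described above can be cross-checked with a little arithmetic. The following is a minimal sketch using only figures stated in this report; the variable names are illustrative, and the per-filesystem capacity assumes the exported capacity is split evenly across the eight filesystems, which the report does not state explicitly.

```python
# Figures taken from the bill of materials and the Disks and Filesystems section.
STORAGE_UNITS = 18            # one S5600 controller enclosure per storage unit
SHELVES_PER_UNIT = 1 + 3      # one S5600 plus three D200 expansions
DISKS_PER_SHELF = 24
LUNS_PER_UNIT = 8
DISKS_PER_LUN = 6 + 6         # 6+6 RAID-10
FILESYSTEMS = 8
LUNS_PER_FILESYSTEM = 18      # one LUN from each storage unit
TOTAL_EXPORTED_GB = 196608

disks_per_unit = SHELVES_PER_UNIT * DISKS_PER_SHELF
total_disks = STORAGE_UNITS * disks_per_unit
total_luns = STORAGE_UNITS * LUNS_PER_UNIT

# Assumption: the exported capacity is divided equally among the filesystems.
exported_per_fs_gb = TOTAL_EXPORTED_GB / FILESYSTEMS

print(f"Disks per storage unit: {disks_per_unit}")
print(f"Total disks:            {total_disks}")
print(f"Total LUNs:             {total_luns}")
print(f"Exported GB per filesystem (assumed equal split): {exported_per_fs_gb:.0f}")
```

The counts are consistent with one another: eight 12-disk LUNs account for all 96 disks in a storage unit, and 8 filesystems of 18 LUNs each use all 144 LUNs.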

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10 Gigabit Ethernet | 8 | Each node has two 10GbE network ports, one of which is in use. |

Network Configuration Notes

All clients were connected to a Huawei Quidway S9303 10GbE switch.

Benchmark Network

An MTU size of 9000 was set for all connections.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 16 | CPU | Intel Six-Core Xeon Processor X5680 3.33GHz, 12MB L2 cache | VxFS, CIFS, TCP/IP |
| 2 | 36 | CPU | Quad-Core AMD Opteron(tm) Processor 2372GHz, 512KB L2 cache | block serving |

Processing Element Notes

Each N8500 NAS Engine Node has two physical processors.

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| Clustered NAS Engine Nodes memory | 96 | 8 | 768 | V |
| The S5600 storage controller provides a write cache vault. When a power failure is detected, a standby UPS kicks in to hold the controller cache contents, and the controller flushes them to internal disk. Data in the controller cache is guaranteed to remain stable for more than 72 hours. | 32 | 18 | 576 | NV |
| Grand Total Memory Gigabytes | | | 1344 | |

Memory Notes

Each Storage Unit has dual storage controllers that work as an active-active failover pair.
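The memory totals can be reproduced from the per-instance sizes and instance counts. This is a small sketch using the values in the Memory table; the row labels and tuple layout are illustrative only.

```python
# (description, size in GB, number of instances, nonvolatile?) from the Memory table.
memory_rows = [
    ("Clustered NAS Engine Nodes memory", 96, 8, False),
    ("S5600 storage controller cache", 32, 18, True),
]

# Total GB per row is size multiplied by the number of instances.
row_totals = {desc: size * count for desc, size, count, _ in memory_rows}
grand_total_gb = sum(row_totals.values())
nonvolatile_gb = sum(size * count for _, size, count, nv in memory_rows if nv)

for desc, total in row_totals.items():
    print(f"{desc}: {total} GB")
print(f"Grand total: {grand_total_gb} GB ({nonvolatile_gb} GB nonvolatile)")
```

Of the 1344 GB in total, only the 576 GB of storage controller cache is marked nonvolatile; the 768 GB in the NAS engine nodes is volatile.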

Stable Storage

The S5600 storage controller provides a write cache vault. When a power failure is detected, a standby UPS kicks in to hold the controller cache contents, and the controller flushes them to internal disk. Data in the controller cache is guaranteed to remain stable for more than 72 hours.

System Under Test Configuration Notes

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 8 | IBM | X3850 X5 | 16 GB RAM and Red Hat Enterprise Linux Server release 5.3 |
| 2 | 1 | Huawei | Huawei Quidway S9303 | Huawei Quidway S9303 10 GbE 24-port switch |

Load Generators

| LG Type Name | IBM X3850 X5 |
| BOM Item # | 1 |
| Processor Name | Intel(R) Xeon(R) CPU E7530 |
| Processor Speed | 1.87 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 6 |
| Memory Size | 16 GB |
| Operating System | Red Hat Enterprise Linux Server release 5.3 |
| Network Type | 10 Gigabit Ethernet |

Load Generator (LG) Configuration

Benchmark Parameters

| Network Attached Storage Type | CIFS |
| Number of Load Generators | 8 |
| Number of Processes per LG | 432 |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..8 | LG1 | 10 Gigabit Ethernet | /vx/_fs1$,/vx/_fs2$,/vx/_fs3$,/vx/_fs4$,/vx/_fs5$,/vx/_fs6$,/vx/_fs7$,/vx/_fs8$ | N/A |

Load Generator Configuration Notes

All filesystems were mounted on all clients.

Uniform Access Rule Compliance

Each client has all file systems mounted from each node.

Other Notes

There are 8 filesystems: fs1, fs2, fs3, fs4, fs5, fs6, fs7, fs8. All of them are cluster-mounted on all 8 server nodes. Each server node in the cluster exports one of the 8 clustered filesystems. For example, node 1 exports fs1, node 2 exports fs2, and so on. SPECsfs clients connect to a network switch to gain access to all 8 server nodes in the cluster, and map all 8 CIFS shares. With this setup, each SPECsfs client can perform read/write I/O to all 8 filesystems in a unified way.
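The export and share-mapping arrangement described above can be modelled in a few lines. This is only an illustrative sketch: the `node1`..`node8` and `client1`..`client8` labels are hypothetical names, not identifiers from the tested configuration.

```python
NODES = 8
CLIENTS = 8

# Node i exports filesystem fs i (node 1 exports fs1, node 2 exports fs2, and so on).
exports = {f"node{i}": f"fs{i}" for i in range(1, NODES + 1)}

# Every client maps the CIFS share exported by every node.
client_mappings = {
    f"client{c}": [(node, fs) for node, fs in exports.items()]
    for c in range(1, CLIENTS + 1)
}

# Check that each client reaches all filesystems, each through a distinct node.
all_filesystems = set(exports.values())
for client, shares in client_mappings.items():
    reached = {fs for _, fs in shares}
    nodes_used = {node for node, _ in shares}
    print(f"{client}: {len(reached)} filesystems via {len(nodes_used)} nodes")

total_mappings = sum(len(shares) for shares in client_mappings.values())
print(f"Total client-to-share mappings: {total_mappings}")
```

Because every client maps one share per node, the load from each client is spread across all 8 nodes and all 8 filesystems, which is the property the Uniform Access Rule Compliance note relies on.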

Config Diagrams

Generated on Thu Apr 07 17:38:19 2011 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 06-Apr-2011