
| Panasas, Inc. | : | Panasas ActiveStor Series 9 |

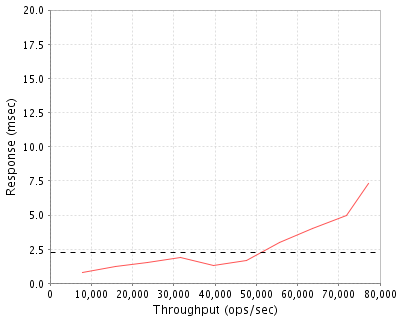

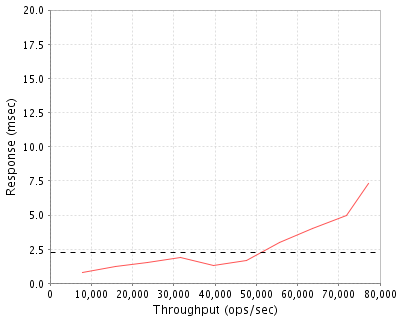

| SPECsfs2008_nfs.v3 | = | 77137 Ops/Sec (Overall Response Time = 2.29 msec) |


| Tested By | Panasas, Inc. |

|---|---|

| Product Name | Panasas ActiveStor Series 9 |

| Hardware Available | October, 2009 |

| Software Available | October, 2009 |

| Date Tested | December, 2009 |

| SFS License Number | 3866 |

| Licensee Locations | Fremont, California, USA |

The Panasas Series 9 NAS features a parallel clustered file system that turns files into smart data objects and dynamically distributes and load-balances data transfer operations across a networked blade architecture. The Panasas ActiveScale distributed file system creates a storage cluster with a single file system and a single global namespace. Each self-contained shelf chassis houses up to eleven DirectorBlade and StorageBlade modules, two redundant network switch modules, two redundant power supplies, and a battery backup module. All components are hot-swappable.

As configured, each shelf includes 8 TB HDD storage, 256 GB SSD storage, 44 GB RAM, and dual 10 GbE network connectivity with the network failover option. Systems can be configured with any number of shelves to scale capacity and performance, in a single global namespace, with a single point of management.

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

| 1 | 10 | Panasas Storage Shelf | Panasas, Inc. | 900027-004 | PAS 9, 3+8, 8TB + 256GB SSD, 32GB Cache, 10GE SFP+ |

| OS Name and Version | ActiveScale 3.5.0.c |

|---|---|

| Other Software | None |

| Filesystem Software | ActiveScale 3.5.0.c |

| Name | Value | Description |

|---|---|---|

| None | None | None |

None

| Description | Number of Disks | Usable Size |

|---|---|---|

| Each DirectorBlade has one 80 GB SATA 7200 RPM HDD that holds the ActiveScale OS, but not filesystem metadata or user data. | 30 | 2.3 TB |

| Each StorageBlade has one 1000 GB SATA 7200 RPM HDD that contains the ActiveScale OS, filesystem metadata, and user data. | 80 | 78.1 TB |

| Each StorageBlade has one 32 GB SSD that contains filesystem metadata and user data. | 80 | 2.5 TB |

| Total | 190 | 83.0 TB |
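The totals in this table can be cross-checked with simple arithmetic. The sketch below is illustrative only: it assumes the usable sizes were converted with 1 TB = 1024 GB, which is inferred from the listed figures and not stated in the disclosure. The names `DISK_GROUPS` and `summarize` are hypothetical.

```python
# Cross-check of the disk table.
# Assumption (not stated in the report): 1 TB = 1024 GB.
GB_PER_TB = 1024

# (description, number of disks, size of each disk in GB), taken from the table
DISK_GROUPS = [
    ("DirectorBlade 80 GB SATA HDD", 30, 80),
    ("StorageBlade 1000 GB SATA HDD", 80, 1000),
    ("StorageBlade 32 GB SSD", 80, 32),
]


def summarize(groups):
    """Return (total disk count, total size in TB, per-group sizes in TB)."""
    per_group_tb = [count * size_gb / GB_PER_TB for _, count, size_gb in groups]
    total_disks = sum(count for _, count, _ in groups)
    return total_disks, sum(per_group_tb), per_group_tb


total_disks, total_tb, per_group_tb = summarize(DISK_GROUPS)
print(total_disks, total_tb, per_group_tb)
```

Under that conversion, each computed value falls within 0.05 TB of the figure reported in the table.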

| Number of Filesystems | 1 |

|---|---|

| Total Exported Capacity | 74840 GB |

| Filesystem Type | PanFS |

| Filesystem Creation Options | Default |

| Filesystem Config | The 10 shelves (80 StorageBlades) in the SUT were configured in a single bladeset, which is a fault-tolerant shared pool of disks. All 30 volumes in the file system share space in this bladeset. Each volume stripes data uniformly across all disks in the pool using Object RAID 1/5, which dynamically selects RAID-1 (mirroring) or RAID-5 (XOR parity) on a per-file basis. The bladeset was configured with vertical parity enabled. Vertical parity corrects media errors at the StorageBlade level, before they are exposed to Object RAID, and provides protection equivalent to RAID-6 against a sector error during a RAID rebuild. |

| Fileset Size | 9256.5 GB |

| Item No | Network Type | Number of Ports Used | Notes |

|---|---|---|---|

| 1 | Jumbo 10 Gigabit Ethernet | 10 | None |

Each Panasas shelf used a single 10 Gigabit Ethernet interface configured with MTU=9000 (jumbo frames). These links were connected to the Cisco Nexus 5020.

Four "Duo Node" ASUS servers (comprising eight nodes) and seven Penguin nodes, for a total of 15 client nodes, were used as load generators and connected via 10 GbE NICs to the Cisco Nexus 5020 switch. The 10 PAS9 shelves were also connected to the same Nexus 5020. There were no additional settings on the clients or the shelves, and the 25 ports were configured in a single VLAN on the Nexus 5020.

| Item No | Qty | Type | Description | Processing Function |

|---|---|---|---|---|

| 1 | 30 | CPU | Pentium-M 1.8 GHz | NFS Gateway, Metadata Server, Cluster Management, iSCSI, TCP/IP |

| 2 | 80 | CPU | Celeron-M 1.5 GHz | Object Storage File System, iSCSI, TCP/IP |

The SUT includes 30 DirectorBlades, each with a single Pentium-M 1.8 GHz CPU, and 80 StorageBlades, each with a single Celeron-M 1.5 GHz CPU. The DirectorBlades manage filesystem metadata and provide NFS gateway services. The StorageBlades store user data and metadata, and provide access to it through the OSD (Object Storage Device) protocol over iSCSI and TCP/IP. One DirectorBlade in the SUT was also running the Panasas realm manager and management user interface.

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |

|---|---|---|---|---|

| Blade main memory | 4 | 110 | 440 | NV |

| Grand Total Memory Gigabytes | 440 |

Each DirectorBlade and each StorageBlade has 4 GB ECC RAM.
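The memory figures follow directly from the blade counts. The sketch below recomputes them; the per-shelf split assumes the 30 DirectorBlades and 80 StorageBlades are spread evenly across the 10 shelves, and the function name is hypothetical.

```python
# Blade counts and per-blade RAM are taken from the report.
SHELVES = 10
DIRECTOR_BLADES = 30
STORAGE_BLADES = 80
GB_PER_BLADE = 4


def memory_summary():
    """Recompute total and per-shelf memory from the blade counts."""
    blades = DIRECTOR_BLADES + STORAGE_BLADES
    # Assumption: blades are distributed evenly over the shelves.
    blades_per_shelf = blades // SHELVES
    storage_blades_per_shelf = STORAGE_BLADES // SHELVES
    return {
        "blades": blades,
        "total_gb": blades * GB_PER_BLADE,
        "blades_per_shelf": blades_per_shelf,
        "gb_per_shelf": blades_per_shelf * GB_PER_BLADE,
        # Matches the "32GB Cache" in the BOM description if that figure
        # refers to StorageBlade memory (an assumption, not confirmed).
        "storage_blade_gb_per_shelf": storage_blades_per_shelf * GB_PER_BLADE,
    }


summary = memory_summary()
print(summary)
```

The result agrees with the 110 instances and 440 GB in the table, and with the 44 GB of RAM per shelf quoted in the product description.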

Each shelf has two (redundant) power supplies as well as a battery, which powers the entire shelf for about five minutes in the event of AC power loss. In the event of a power failure, each blade saves cached writes from main memory to its local HDD before shutting down. The data saved in this way is maintained indefinitely while the system is powered down, and is automatically recovered when power is restored.

The SUT consists of 10 Panasas PAS9 shelves, with each shelf connected via 10 GbE SFP+ to a Cisco Nexus 5020 10 GbE switch. Each of the 15 load generators was connected to the Cisco Nexus 5020 with 10 GbE SFP+.

| Item No | Qty | Vendor | Model/Name | Description |

|---|---|---|---|---|

| 1 | 4 | ASUS | RS700D-E6/PS8 | ASUS "Duo Node" Linux server |

| 2 | 7 | Penguin Computing | Altus 1650SA | Penguin Linux server |

| 3 | 1 | Cisco | Nexus 5020 | Cisco 40-port 10 GbE Switch |

| LG Type Name | ASUS |

|---|---|

| BOM Item # | 1 |

| Processor Name | Intel Xeon E5530 |

| Processor Speed | 2.4 GHz |

| Number of Processors (chips) | 2 |

| Number of Cores/Chip | 4 |

| Memory Size | 1 GB |

| Operating System | CentOS 5.3 (2.6.18-128.el5) |

| Network Type | 1 Intel 82598EB 10 Gigabit NIC |

| LG Type Name | Penguin |

|---|---|

| BOM Item # | 2 |

| Processor Name | AMD Opteron 2356 |

| Processor Speed | 2.3 GHz |

| Number of Processors (chips) | 2 |

| Number of Cores/Chip | 4 |

| Memory Size | 1 GB |

| Operating System | CentOS 5.3 (2.6.18-128.el5) |

| Network Type | 1 Intel 82598EB 10 Gigabit NIC |

| Network Attached Storage Type | NFS V3 |

|---|---|

| Number of Load Generators | 15 |

| Number of Processes per LG | 30 |

| Biod Max Read Setting | 2 |

| Biod Max Write Setting | 2 |

| Block Size | AUTO |
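For a rough sense of scale, the load-generator settings can be combined with the headline throughput. The sketch below computes simple averages only; it assumes the load was spread evenly, which the benchmark does not guarantee, and the function name is hypothetical.

```python
# Figures taken from the report.
LOAD_GENERATORS = 15
PROCESSES_PER_LG = 30
THROUGHPUT_OPS = 77137  # SPECsfs2008_nfs.v3 result in Ops/Sec
DIRECTOR_BLADES = 30
SHELVES = 10


def load_summary():
    """Return total load processes and naive per-unit throughput averages."""
    total_processes = LOAD_GENERATORS * PROCESSES_PER_LG
    return {
        "total_processes": total_processes,
        # Averages assume an even spread of load (an assumption).
        "ops_per_process": THROUGHPUT_OPS / total_processes,
        "ops_per_director_blade": THROUGHPUT_OPS / DIRECTOR_BLADES,
        "ops_per_shelf": THROUGHPUT_OPS / SHELVES,
    }


load = load_summary()
print(load)
```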

| LG No | LG Type | Network | Target Filesystems | Notes |

|---|---|---|---|---|

| 1..1 | ASUS | N1 | F1 (GW1:/V16, GW2:/V16, ..., GW15:/V16; GW16:/V01, GW17:/V01, ..., GW30:/V01) | |

| 2..2 | ASUS | N1 | F1 (GW1:/V17, GW2:/V17, ..., GW15:/V17; GW16:/V02, GW17:/V02, ..., GW30:/V02) | |

| 3..3 | ASUS | N1 | F1 (GW1:/V18, GW2:/V18, ..., GW15:/V18; GW16:/V03, GW17:/V03, ..., GW30:/V03) | |

| 4..4 | ASUS | N1 | F1 (GW1:/V19, GW2:/V19, ..., GW15:/V19; GW16:/V04, GW17:/V04, ..., GW30:/V04) | |

| 5..5 | ASUS | N1 | F1 (GW1:/V20, GW2:/V20, ..., GW15:/V20; GW16:/V05, GW17:/V05, ..., GW30:/V05) | |

| 6..6 | ASUS | N1 | F1 (GW1:/V21, GW2:/V21, ..., GW15:/V21; GW16:/V06, GW17:/V06, ..., GW30:/V06) | |

| 7..7 | ASUS | N1 | F1 (GW1:/V22, GW2:/V22, ..., GW15:/V22; GW16:/V07, GW17:/V07, ..., GW30:/V07) | |

| 8..8 | ASUS | N1 | F1 (GW1:/V23, GW2:/V23, ..., GW15:/V23; GW16:/V08, GW17:/V08, ..., GW30:/V08) | |

| 9..9 | Penguin | N1 | F1 (GW1:/V24, GW2:/V24, ..., GW15:/V24; GW16:/V09, GW17:/V09, ..., GW30:/V09) | |

| 10..10 | Penguin | N1 | F1 (GW1:/V25, GW2:/V25, ..., GW15:/V25; GW16:/V10, GW17:/V10, ..., GW30:/V10) | |

| 11..11 | Penguin | N1 | F1 (GW1:/V26, GW2:/V26, ..., GW15:/V26; GW16:/V11, GW17:/V11, ..., GW30:/V11) | |

| 12..12 | Penguin | N1 | F1 (GW1:/V27, GW2:/V27, ..., GW15:/V27; GW16:/V12, GW17:/V12, ..., GW30:/V12) | |

| 13..13 | Penguin | N1 | F1 (GW1:/V28, GW2:/V28, ..., GW15:/V28; GW16:/V13, GW17:/V13, ..., GW30:/V13) | |

| 14..14 | Penguin | N1 | F1 (GW1:/V29, GW2:/V29, ..., GW15:/V29; GW16:/V14, GW17:/V14, ..., GW30:/V14) | |

| 15..15 | Penguin | N1 | F1 (GW1:/V30, GW2:/V30, ..., GW15:/V30; GW16:/V15, GW17:/V15, ..., GW30:/V15) | |

Each of the 15 clients accessed two volumes, each through 15 of the 30 gateways, so that every client used all 30 gateways. In this way, the 15 clients together accessed all 30 volumes in the file system and used all 30 gateways. In all cases, each gateway accessed was serving a volume other than its primary volume, preventing any gateway/volume affinity in the file system.

Each Panasas DirectorBlade provides both NFS gateway services for the entire filesystem, and metadata management services for a portion of the filesystem (one or more virtual volumes). The system as tested contained 30 DirectorBlades and was configured with 30 volumes, one managed by each DirectorBlade.

To comply with the Uniform Access Rule (UAR) for a single namespace, each of the 15 clients accessed two volumes, and each volume was accessed through 15 NFS gateways. In all cases, the 15 NFS gateways used for a given volume were selected such that they did not include the DirectorBlade providing metadata services for that volume. In this way, each client accessed all 30 gateways, all 30 volumes were accessed equally by the 15 clients, and no client was afforded any advantage by colocating the NFS gateway and metadata server for the volume being accessed.
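The access pattern described above can be reconstructed and checked programmatically. The following is a minimal sketch based on the load-generator table, not the tool used for the test. It assumes a hypothetical numbering in which volume Vk is managed by the DirectorBlade hosting gateway GWk; the report does not state this correspondence.

```python
# Reconstruction of the load-generator mapping table.
# Assumption (hypothetical numbering): volume Vk is managed by the
# DirectorBlade that hosts gateway GWk.
N_CLIENTS = 15
N_GATEWAYS = 30


def build_mapping():
    """Return {client: [(gateway, volume), ...]} following the table."""
    mapping = {}
    for client in range(1, N_CLIENTS + 1):
        # Gateways 1..15 are used to reach volume V(15 + client).
        pairs = [(gw, 15 + client) for gw in range(1, 16)]
        # Gateways 16..30 are used to reach volume V(client).
        pairs += [(gw, client) for gw in range(16, 31)]
        mapping[client] = pairs
    return mapping


def check_uniform_access(mapping):
    """Check the properties described in the report; return gateways per volume."""
    gateways_per_volume = {}
    for pairs in mapping.values():
        # Each client reaches all 30 gateways.
        assert {gw for gw, _ in pairs} == set(range(1, N_GATEWAYS + 1))
        # Each client touches exactly two volumes.
        assert len({vol for _, vol in pairs}) == 2
        for gw, vol in pairs:
            # Under the numbering assumption, no gateway serves its own volume.
            assert gw != vol
            gateways_per_volume.setdefault(vol, set()).add(gw)
    # All 30 volumes are covered, each through exactly 15 gateways.
    assert len(gateways_per_volume) == 30
    assert all(len(gws) == 15 for gws in gateways_per_volume.values())
    return gateways_per_volume


mapping = build_mapping()
gateways_per_volume = check_uniform_access(mapping)
```

If the real gateway-to-volume ownership differs from the assumed numbering, only the `gw != vol` check would need to be replaced with a lookup of the actual owner.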

The 80 Panasas StorageBlades were configured as a single bladeset (storage pool), so data from all 30 volumes was distributed uniformly across all StorageBlades. This results in uniform access from the clients to the storage and ensures that each server in the cluster draws the same amount of data traffic from each disk.

Generated on Fri Mar 05 12:44:03 2010 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 05-Mar-2010